E-commerce scraping with Selenium? What I learned.

Why E-commerce Web Scraping Matters

In today's competitive e-commerce landscape, understanding your market is crucial. We're not just talking about gut feelings anymore; we're talking about data. E-commerce web scraping is the process of automatically extracting data from e-commerce websites. It's like having a team of virtual assistants constantly monitoring prices, product details, and availability for you. This provides a significant competitive advantage.

Think about it: you can track competitor pricing in real-time, allowing you to adjust your own prices dynamically. You can monitor product availability to ensure you're always in stock and avoid losing sales. You can even analyze customer reviews to understand what people love (and hate) about your products and your competitors' products.

Beyond these immediate benefits, e-commerce data scraping empowers you to identify emerging market trends, improve sales forecasting, and personalize your customer experience. It's the key to making informed decisions and staying ahead of the curve.

And what if you could get all of this market research data without having to learn a complex programming language? While understanding programming definitely opens doors, we'll discuss options later for those looking to scrape data without coding!

What Can You Scrape From E-commerce Sites?

The possibilities are vast, but here are some common and valuable types of data you can extract:

- Product Prices: Track price fluctuations over time, identify competitors' pricing strategies, and optimize your own pricing.

- Product Descriptions: Analyze product features, identify keywords, and improve your own product listings.

- Product Images: Gather visual data for analysis, competitor comparison, and content creation.

- Product Reviews: Understand customer sentiment, identify pain points, and improve product quality or customer service. Review text is also the raw input for sentiment analysis.

- Product Availability: Monitor stock levels, identify out-of-stock products, and optimize inventory management.

- Product Ratings: Get an overview of customer satisfaction and identify top-rated products.

- Product Categories: Understand product organization and identify niche markets.

- Shipping Information: Analyze shipping costs and delivery times to optimize your own shipping strategies.

- Promotions and Discounts: Track promotional offers and discounts to identify competitor strategies and optimize your own promotions.

Is Web Scraping Legal and Ethical?

This is a crucial question, and it's important to approach web scraping responsibly. While web scraping itself isn't inherently illegal, how you do it and what you do with the data matters. Always, *always* respect the website's terms of service (ToS) and robots.txt file.

The robots.txt file is a text file that websites use to instruct web robots (like web scrapers) about which parts of their site should not be accessed. You can usually find it by adding /robots.txt to the end of the website's URL (e.g., www.example.com/robots.txt). Pay attention to the "Disallow" directives.
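As a rough illustration of checking "Disallow" directives before scraping, here is a minimal sketch using Python's standard urllib.robotparser module. The robots.txt content, the URLs, and the user agent name "my-scraper" are made up for the example; against a real site you would point the parser at the live file with set_url() and read() instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used so the example runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Disallow: /account/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="my-scraper"):
    """Return True if the parsed robots.txt permits user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


print(is_allowed("https://www.example.com/products/widget"))
print(is_allowed("https://www.example.com/checkout/cart"))
```

A scraper can call a check like this before every request and skip any URL that is not allowed.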

Here are some key ethical considerations:

- Respect the robots.txt file: Don't scrape pages that are disallowed.

- Don't overload the server: Implement delays between requests to avoid overwhelming the website's server. Be a considerate digital citizen.

- Use the data responsibly: Don't use scraped data for malicious purposes, such as spamming or price gouging.

- Give credit where it's due: If you're using scraped data in a public report or analysis, acknowledge the source.

- Comply with copyright laws: Be careful about scraping copyrighted material, such as images or text.

- Terms of Service: Many websites explicitly prohibit web scraping in their Terms of Service. Check these carefully before starting a project.

In short: be respectful, transparent, and avoid causing harm to the website owner or its users. If in doubt, consult with a legal professional.

Tools of the Trade: Python Web Scraping with Scrapy

Python is a popular language for web scraping due to its ease of use and powerful libraries. One of the most powerful frameworks for Python web scraping is Scrapy.

Scrapy is a high-level web scraping framework that provides a structured and efficient way to extract data from websites. It takes care of much of the plumbing, such as scheduling requests, parsing HTML, and managing data pipelines.

Here's a simple example of how to use Scrapy to scrape product names and prices from an e-commerce website (let's pretend it's called "ExampleShop"):

import scrapy


class ExampleShopSpider(scrapy.Spider):
    # Spider name; used by "scrapy crawl" when the spider lives in a Scrapy project
    name = "exampleshop"
    start_urls = ['https://www.exampleshop.com/products']  # Replace with the actual URL

    def parse(self, response):
        # Each matching element is assumed to wrap one product
        for product in response.css('div.product'):  # Replace with the actual CSS selector
            yield {
                'name': product.css('h2.product-name::text').get(),  # Replace with the actual CSS selector
                'price': product.css('span.product-price::text').get(),  # Replace with the actual CSS selector
            }

# To run this, save it as a standalone Python file (e.g., exampleshop_spider.py)
# and run it from the command line using:
# scrapy runspider exampleshop_spider.py -o output.json
# ("scrapy crawl exampleshop -o output.json" works only inside a Scrapy project)

Let's break down this code:

- import scrapy: Imports the Scrapy library.

- class ExampleShopSpider(scrapy.Spider): Defines a spider class that inherits from scrapy.Spider.

- name = "exampleshop": Assigns a name to the spider. Scrapy uses it to identify the spider, for example with scrapy crawl exampleshop inside a Scrapy project.

- start_urls = ['https://www.exampleshop.com/products']: Specifies the URLs that the spider will start crawling from. Important: Replace this with the actual URL of the e-commerce website you want to scrape.

- def parse(self, response): Defines the parsing function that will be called for each page that the spider crawls.

- for product in response.css('div.product'): Uses a CSS selector to find all the elements on the page that represent individual products. Important: You'll need to inspect the HTML of the target website to determine the correct CSS selector to use. Use your browser's developer tools (usually accessed by pressing F12) to examine the HTML structure.

- yield {'name': product.css('h2.product-name::text').get(), 'price': product.css('span.product-price::text').get()}: Extracts the product name and price from each product element using CSS selectors and stores them in a dictionary. Again, Important: You'll need to inspect the HTML to determine the correct CSS selectors. The ::text selector extracts the text content of the selected element, and .get() retrieves the first matching result (or None if nothing matches). yield makes parse a generator, so items are emitted one at a time instead of being collected in memory first.

How to run this code:

- Install Scrapy: pip install scrapy

- Save the code: Save the Python code as a file (e.g., exampleshop_spider.py).

- Run the spider: Open a terminal or command prompt, navigate to the directory where you saved the file, and run: scrapy runspider exampleshop_spider.py -o output.json (The scrapy crawl exampleshop form only works inside a Scrapy project created with scrapy startproject.)

- Examine the output: The scraped data will be saved in a JSON file named output.json.

This is a very basic example, but it demonstrates the fundamental principles of web scraping with Scrapy. You can extend this code to extract more data, handle pagination, and implement more sophisticated scraping techniques.
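To make the pagination idea concrete, here is a framework-independent sketch using only the standard library. It assumes the site marks its "next page" link with class="next" (a hypothetical convention; inspect the real markup), and the fetch argument stands in for whatever actually downloads a page. In a Scrapy spider the same idea is usually expressed by yielding response.follow(next_href, callback=self.parse) from parse.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class NextLinkParser(HTMLParser):
    """Records the href of the first <a> tag whose class list contains 'next'."""

    def __init__(self):
        super().__init__()
        self.next_href = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        classes = (attrs.get("class") or "").split()
        if tag == "a" and "next" in classes and self.next_href is None:
            self.next_href = attrs.get("href")


def crawl_pages(start_url, fetch, max_pages=50):
    """Yield (url, html) per page, following 'next' links until none remain."""
    url, seen = start_url, set()
    while url and url not in seen and len(seen) < max_pages:
        seen.add(url)
        html = fetch(url)
        yield url, html
        parser = NextLinkParser()
        parser.feed(html)
        # Resolve relative links against the current page URL.
        url = urljoin(url, parser.next_href) if parser.next_href else None


# In-memory pages (hypothetical) so the example runs without network access.
PAGES = {
    "https://www.exampleshop.com/products":
        '<a class="next" href="/products?page=2">Next</a>',
    "https://www.exampleshop.com/products?page=2": "<p>last page</p>",
}
visited = [url for url, _ in crawl_pages(
    "https://www.exampleshop.com/products", PAGES.__getitem__)]
print(visited)
```

The seen set and max_pages cap guard against "next" links that loop back to an earlier page.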

Important Considerations:

- CSS Selectors: Getting the correct CSS selectors is *the* critical part. Take your time and learn how to use your browser's developer tools effectively.

- Website Structure: Websites change. Your spider might work perfectly today and break tomorrow if the website's HTML structure changes. Be prepared to maintain your spiders.

- Error Handling: Add error handling to your spider to gracefully handle unexpected situations, such as network errors or changes in the website's structure.

- Rate Limiting: Implement delays between requests to avoid overwhelming the website's server and getting blocked. Scrapy has built-in settings for this.
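For the rate-limiting point, the sketch below collects a few of Scrapy's built-in settings into a dictionary. The setting names are real Scrapy settings; the values and the POLITE_SETTINGS name are assumptions for illustration and should be tuned to the target site.

```python
# Hypothetical values; adjust them to what the target site can tolerate.
POLITE_SETTINGS = {
    "ROBOTSTXT_OBEY": True,               # skip URLs disallowed by robots.txt
    "DOWNLOAD_DELAY": 2.0,                # base delay in seconds between requests
    "RANDOMIZE_DOWNLOAD_DELAY": True,     # vary the delay around the base value
    "CONCURRENT_REQUESTS_PER_DOMAIN": 1,  # one request at a time per site
    "AUTOTHROTTLE_ENABLED": True,         # adapt the delay to server response times
    "AUTOTHROTTLE_START_DELAY": 2.0,
    "AUTOTHROTTLE_MAX_DELAY": 30.0,
    "RETRY_TIMES": 2,                     # retry failed requests a couple of times
}

for key, value in POLITE_SETTINGS.items():
    print(f"{key} = {value!r}")
```

One way to apply these is to assign the dictionary to the custom_settings class attribute of a spider; in a full Scrapy project the same keys can go in settings.py instead.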

What About When Scrapy Isn't Enough? (Headless Browsers)

Sometimes, websites use JavaScript to dynamically load content. In these cases, Scrapy alone might not be sufficient, as it only fetches the initial HTML source code. That's where headless browser solutions like Selenium come in.

A headless browser is a web browser without a graphical user interface. It can execute JavaScript and render web pages just like a regular browser, but it does so in the background, without displaying anything on the screen. This allows you to scrape websites that rely heavily on JavaScript.

Selenium automates web browsers. It can simulate user interactions, such as clicking buttons, filling out forms, and scrolling through pages. This makes it a powerful tool for scraping dynamic websites.

However, Selenium is generally slower and more resource-intensive than Scrapy. It's best to use Scrapy whenever possible and only resort to Selenium when you need to scrape JavaScript-heavy websites.

Selenium is often paired with Beautiful Soup for parsing the rendered HTML.
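Here is a minimal sketch of that workflow with Selenium 4 and headless Chrome. It assumes Selenium and a Chrome installation are available; the function name fetch_rendered_html, the HEADLESS_ARGS constant, and the example URL and selector are illustrative, not part of any library.

```python
# Chrome command-line flags; "--headless=new" selects Chrome's current headless mode.
HEADLESS_ARGS = ("--headless=new", "--window-size=1280,1024")


def fetch_rendered_html(url, wait_css, timeout=10):
    """Load url in headless Chrome, wait for wait_css to appear, return the HTML."""
    # Imported lazily so this module can be loaded without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    for arg in HEADLESS_ARGS:
        options.add_argument(arg)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has added at least one matching element.
        WebDriverWait(driver, timeout).until(
            EC.presence_of_element_located((By.CSS_SELECTOR, wait_css))
        )
        return driver.page_source
    finally:
        driver.quit()  # always release the browser process
```

A call such as fetch_rendered_html("https://www.exampleshop.com/products", "div.product") would return the rendered page source, which can then be handed to Beautiful Soup or another HTML parser.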

No-Code Web Scraping? Is It Possible?

Absolutely! If you're not comfortable with coding, there are various web scraping software and tools that allow you to scrape data without coding. These tools typically provide a visual interface where you can select the data you want to extract and configure the scraping process. They often work by using browser extensions to 'record' your actions on the target webpage and then replay them to extract the data.

Examples of no-code web scraping tools include:

- ParseHub: A popular web scraping tool with a visual interface.

- Octoparse: Another popular tool with a range of features and pricing plans.

- WebHarvy: A desktop-based web scraping tool.

- Import.io: A cloud-based web scraping platform.

These tools are a great option for users who don't have programming experience or who need to quickly extract data from a website without writing code. However, they may have limitations compared to coding-based solutions, such as difficulty handling complex websites or less flexibility in customizing the scraping process.

Turning Scraped Data into Actionable Insights

Once you've scraped the data, the real work begins: turning that raw data into actionable insights. Here are some things you can do:

- Data Cleaning: Clean and format the data to ensure its accuracy and consistency. This might involve removing duplicates, correcting errors, and standardizing formats.

- Data Analysis: Analyze the data to identify trends, patterns, and anomalies. This might involve using statistical techniques, data visualization tools, or machine learning algorithms.

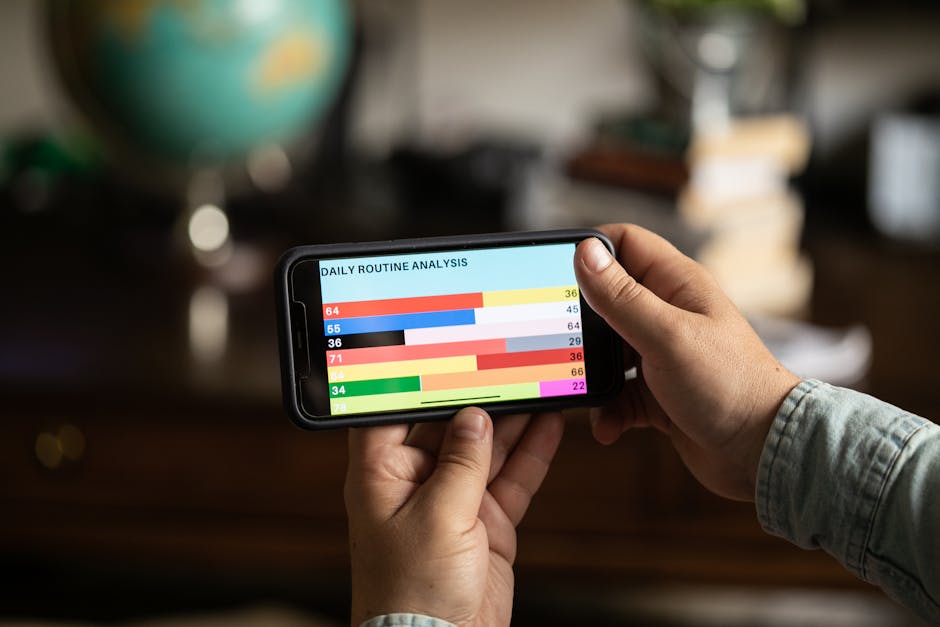

- Data Visualization: Create charts, graphs, and dashboards to communicate your findings effectively.

- Automated Reporting: Set up automated reports to track key metrics and monitor changes over time.

- Integration with Other Systems: Integrate the scraped data with your CRM, ERP, or other business systems to improve decision-making across your organization.
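The data cleaning step might look something like the sketch below, which works on dictionaries shaped like the name/price items from the spider example. It assumes US-style price strings (comma as thousands separator, dot as decimal point); the function names and sample rows are made up for illustration.

```python
import re
from decimal import Decimal


def parse_price(raw):
    """Convert a scraped price string such as '$1,299.99' to Decimal, or None."""
    if raw is None:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return Decimal(match.group().replace(",", ""))


def clean_items(items):
    """Strip whitespace, parse prices, and drop incomplete or duplicate rows."""
    seen, cleaned = set(), []
    for item in items:
        name = (item.get("name") or "").strip()
        price = parse_price(item.get("price"))
        if not name or price is None:
            continue  # incomplete row
        key = (name.lower(), price)
        if key in seen:
            continue  # duplicate row
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned


# Hypothetical scraped rows.
raw_items = [
    {"name": "  Widget ", "price": "$1,299.99"},
    {"name": "Widget", "price": "$1,299.99"},
    {"name": "Gadget", "price": None},
    {"name": "Gizmo", "price": "USD 15"},
]
cleaned = clean_items(raw_items)
print(cleaned)
```

Decimal is used instead of float so that prices compare and sum exactly.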

For example, if you're tracking competitor pricing, you can use the data to automatically adjust your own prices to maintain a competitive edge. If you're monitoring product reviews, you can use recurring complaints and praise to identify areas for product improvement.
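As one possible shape for automatic price adjustment, here is a deliberately simple sketch: undercut the cheapest competitor by a small amount, but never drop below a floor price. The rule itself, the function name suggest_price, and the sample numbers are assumptions for illustration; a real repricing policy would depend on your margins and strategy.

```python
from decimal import Decimal


def suggest_price(competitor_prices, floor, undercut=Decimal("0.01")):
    """Undercut the cheapest competitor by `undercut`, never going below `floor`."""
    if not competitor_prices:
        return None  # nothing to compare against
    target = min(competitor_prices) - undercut
    return max(target, floor)


print(suggest_price([Decimal("19.99"), Decimal("21.50")], floor=Decimal("15.00")))
print(suggest_price([Decimal("12.00")], floor=Decimal("15.00")))
```

The floor keeps an unusually low competitor price from dragging your own price below cost.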

The Future: Data as a Service (DaaS)

If you don't want to build and maintain your own web scrapers, you can leverage data as a service (DaaS) solutions. These services provide pre-scraped data on a subscription basis. This can be a cost-effective option for businesses that need access to large amounts of data but don't have the resources to build their own scraping infrastructure.

DaaS providers often specialize in specific industries or data types, such as e-commerce, finance, or real estate. They handle the complexities of web scraping, data cleaning, and data delivery, allowing you to focus on analyzing the data and using it to improve your business.

E-commerce Scraping: A Quick Checklist to Get Started

- Identify Your Needs: What data do you need? What websites do you need to scrape?

- Check Legality and Ethics: Review the website's ToS and robots.txt.

- Choose Your Tools: Python/Scrapy, Selenium, or a no-code scraping tool?

- Build (or Subscribe): Develop your scraper or choose a DaaS provider.

- Clean and Analyze: Process the data and extract meaningful insights.

- Automate: Set up automated scraping and reporting to ensure you always have the latest data.

- Iterate: Continuously monitor and improve your scraping process. Websites change!

Ready to Dive Deeper?

Web scraping can feel overwhelming at first, but breaking it down into manageable steps makes it approachable for everyone.

For more advanced tips, tutorials, and real-world examples, be sure to check out our other blog posts. And if you're looking for a comprehensive solution to your data needs, don't hesitate to contact us.

Ready to take your e-commerce business to the next level with the power of data?

Contact: info@justmetrically.com

#WebScraping #Ecommerce #DataScraping #Python #Scrapy #DataAnalysis #MarketResearch #CompetitiveIntelligence #AutomatedDataExtraction #ProductMonitoring